AI governance has emerged as a critical framework for organizations to ensure responsible and ethical use of AI technologies. AI governance can be defined as the comprehensive system of principles, policies, and practices that guide the development, deployment, and management of artificial intelligence within an organization. This multifaceted approach aims to harness the immense potential of AI while mitigating associated risks and maintaining alignment with ethical standards and regulatory requirements.

The Importance of AI Governance

AI governance has become essential as AI systems grow more sophisticated and pervasive across sectors, and organizations face mounting pressure to implement robust governance structures. This pressure stems from a confluence of factors, including the need to maintain public trust, safeguard against misuse or abuse of AI, and comply with an evolving regulatory landscape. As a result, AI governance should be a top priority for compliance officers, who need to integrate it into their broader corporate compliance programs. Deputy Attorney General Lisa Monaco of the U.S. Department of Justice (DOJ) made this expectation explicit in a recent speech: “And compliance officers should take note. When our prosecutors assess a company’s compliance program — as they do in all corporate resolutions — they consider how well the program mitigates the company’s most significant risks. And for a growing number of businesses, that now includes the risk of misusing AI. That’s why, going forward and wherever applicable, our prosecutors will assess a company’s ability to manage AI-related risks as part of its overall compliance efforts.”

But beyond that, effective AI governance serves as a cornerstone for sustainable innovation and competitive advantage. By establishing clear guidelines and ethical boundaries, organizations can foster an environment that encourages responsible AI development while mitigating potential legal, reputational, and operational risks. This proactive approach not only protects the organization but also builds trust with stakeholders, including customers, employees, and regulatory bodies.

AI Governance and Regulation

The regulatory landscape surrounding AI is becoming increasingly complex and stringent. Governments and international bodies are recognizing the profound impact of AI on society and are responding with new legislation and guidelines. For instance, the European Union's AI Act establishes a comprehensive regulatory framework for AI systems, categorizing them by risk level and imposing stringent requirements on high-risk applications. In the United States, while no overarching federal AI regulation exists yet, various agencies are issuing guidance and enforcing existing laws in the context of AI. The Federal Trade Commission, for example, has signaled its intent to use its authority to address unfair or deceptive practices involving AI.

As we delve deeper into the risks associated with unauthorized and undisclosed use of AI within businesses, it becomes evident that a robust AI governance framework is not merely a luxury but a necessity for modern organizations. The following sections will explore these risks in detail and underscore the critical need for comprehensive AI governance strategies.

Risks Of Unauthorized AI Use

The unauthorized use of AI within a business context presents a myriad of risks that can have far-reaching consequences for organizations. These risks span various domains, including data privacy, ethical considerations, and legal compliance. Let's examine some of the most pressing concerns:

Data privacy violations stand at the forefront of risks associated with unauthorized AI use. AI systems often require vast amounts of data to function effectively, and when deployed without proper oversight, they may inadvertently process or expose sensitive information in ways that violate data protection regulations such as the General Data Protection Regulation (GDPR) or the California Consumer Privacy Act (CCPA). For instance, an unauthorized AI system might collect or analyze personal data without the requisite consent, potentially leading to severe legal repercussions and erosion of customer trust.

Algorithmic bias is another significant risk that can arise from the unauthorized use of AI. When AI systems are developed or deployed without proper governance, they may perpetuate or even amplify existing biases present in training data or algorithmic design. This can lead to discriminatory outcomes in critical areas such as hiring, lending, or criminal justice, potentially violating anti-discrimination laws and ethical principles. The DOJ's guidance on corporate compliance programs emphasizes the importance of "appropriate controls" to prevent misconduct, which in the context of AI, would include measures to detect and mitigate algorithmic bias.
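One widely used screen for the kind of discriminatory outcome described above is the "four-fifths rule" from the U.S. EEOC's Uniform Guidelines on Employee Selection Procedures: if a group's selection rate is less than 80% of the highest group's rate, that may indicate adverse impact. The sketch below assumes a model's outcomes are available per group as (selected, total) counts; it computes each group's impact ratio and flags those under the threshold. The function name and data are hypothetical, and this is a screening heuristic, not a legal determination or a control the DOJ guidance prescribes.

```python
from typing import Dict, Tuple


def adverse_impact_flags(
    outcomes: Dict[str, Tuple[int, int]], threshold: float = 0.8
) -> Dict[str, float]:
    """Return impact ratios for groups whose ratio falls below `threshold`.

    `outcomes` maps a group label to (number selected, number of applicants).
    Each group's selection rate is divided by the highest group's rate; the
    four-fifths rule corresponds to threshold=0.8.
    """
    # Selection rate per group, skipping groups with no applicants.
    rates = {g: sel / total for g, (sel, total) in outcomes.items() if total > 0}
    if not rates:
        return {}
    best = max(rates.values())
    if best == 0:
        return {}  # nobody was selected in any group, so ratios are undefined
    return {g: r / best for g, r in rates.items() if r / best < threshold}


# Hypothetical outcomes from a hiring model, for illustration only.
example = {"group_a": (48, 100), "group_b": (30, 100)}
print(adverse_impact_flags(example))
```

Here group_b's rate (30%) is about 62.5% of group_a's (48%), so it is flagged for review.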

Intellectual property infringement is a less obvious but equally serious risk of unauthorized AI use. AI systems, particularly those involving machine learning, may be trained on datasets that include copyrighted material or proprietary information without proper licensing or permissions. This could lead to inadvertent infringement of intellectual property rights, exposing the organization to legal action and financial liabilities.

Legal And Regulatory Risks

The unauthorized use of AI can expose businesses to a host of legal and regulatory risks, which can have severe implications for the organization's operations, finances, and reputation.

Compliance violations are a primary concern when AI is used without proper authorization and governance. As regulatory frameworks evolve to address the challenges posed by AI, organizations may find themselves in breach of various laws and regulations. For example, the use of AI in financial services without appropriate controls could violate laws such as the Fair Credit Reporting Act or the Equal Credit Opportunity Act. Similarly, unauthorized AI use in healthcare settings could breach HIPAA if patient data is not handled in accordance with its privacy and security standards.

The consequences of such compliance violations can be severe, often resulting in fines and penalties. Regulatory bodies are increasingly vigilant about AI-related infractions and are prepared to impose substantial financial penalties on organizations that fail to comply with relevant laws and guidelines. For instance, the GDPR allows fines of up to €20 million or 4% of total worldwide annual turnover, whichever is higher, for the most serious violations, which could amount to billions for large corporations.
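To make the GDPR ceiling concrete: Article 83(5) caps fines for the most serious infringements at €20 million or 4% of total worldwide annual turnover for the preceding financial year, whichever is higher. The small calculation below uses hypothetical turnover figures to show why the cap only reaches into the billions for very large firms. It computes the statutory maximum, not the fine a regulator would actually impose.

```python
def gdpr_max_fine_eur(annual_turnover_eur: float) -> float:
    """Upper bound for the most serious GDPR infringements (Art. 83(5)):
    the higher of EUR 20 million or 4% of worldwide annual turnover."""
    return max(20_000_000.0, 0.04 * annual_turnover_eur)


# Hypothetical turnovers: a mid-sized firm and a very large multinational.
for turnover in (50_000_000, 100_000_000_000):
    print(f"turnover EUR {turnover:,}: cap EUR {gdpr_max_fine_eur(turnover):,.0f}")
```

For the €50 million firm, 4% is only €2 million, so the €20 million floor applies; for the €100 billion firm, the 4% branch yields €4 billion.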

Beyond immediate financial repercussions, unauthorized AI use can lead to significant reputational damage. In an era where corporate ethics and responsible technology use are under intense scrutiny, revelations of unauthorized or unethical AI deployment can severely tarnish an organization's image. This reputational harm can have long-lasting effects, eroding stakeholder trust, deterring potential customers and partners, and potentially impacting the company's market value.

Operational Risks

The unauthorized use of AI within a business also presents substantial operational risks that can undermine the organization's efficiency, decision-making processes, and overall governance structure.

Uncontrolled AI deployment is a significant operational risk that arises when AI systems are implemented without proper oversight and integration into existing business processes. This can lead to a fragmented technological landscape where different departments or individuals deploy AI solutions that are not aligned with the organization's broader strategic objectives or technical standards. The result is often a patchwork of incompatible systems, data silos, and inefficiencies that can hinder rather than enhance operational performance.

The lack of accountability is another critical operational risk associated with unauthorized AI use. When AI systems are deployed without proper governance structures, it becomes challenging to attribute decisions or outcomes to specific individuals or departments. This lack of clear accountability can lead to a diffusion of responsibility, making it difficult to address issues, implement improvements, or respond effectively to incidents or errors arising from AI-driven processes.

Furthermore, unauthorized AI use can result in inconsistent decision-making across the organization. Without a centralized governance framework, different AI systems may be making decisions based on varying criteria, data sets, or algorithmic approaches. This inconsistency can lead to conflicting outcomes, inefficiencies, and potential fairness issues, particularly in areas such as customer service, resource allocation, or risk assessment.

The DOJ's guidance on corporate compliance programs emphasizes the importance of "internal reporting mechanisms" and "investigations of misconduct." In the context of AI governance, this underscores the need for robust systems to detect unauthorized AI use, investigate its impact, and implement corrective measures promptly.

As we continue to explore the implications of unauthorized and undisclosed AI use, it becomes increasingly clear that comprehensive AI governance is not just a regulatory requirement but a critical component of effective business management in the age of artificial intelligence. The next sections will delve into the consequences of undisclosed AI use and provide insights into implementing effective AI governance strategies to mitigate these risks and harness the full potential of AI technologies responsibly.

Consequences Of Undisclosed AI Use

The undisclosed use of AI within an organization presents a host of challenges that extend beyond the immediate risks of unauthorized deployment. These consequences can have far-reaching implications for a company's ethical standing, relationships with stakeholders, and competitive position in the market.

Ethical concerns are at the forefront of the consequences arising from undisclosed AI use. When an organization fails to disclose its use of AI in decision-making processes or customer interactions, it breaches the fundamental ethical principle of transparency. This lack of transparency can be seen as a form of deception, potentially violating the trust placed in the organization by its customers, employees, and other stakeholders. The DOJ's "Evaluation of Corporate Compliance Programs" emphasizes the importance of "fostering a culture of ethics and compliance." Undisclosed AI use directly contradicts this principle, as it creates an environment of secrecy and potential misconduct. Moreover, the undisclosed use of AI raises questions about informed consent. If individuals are unaware that they are interacting with or being assessed by AI systems, they are unable to make informed decisions about their engagement with the organization. This is particularly problematic in sectors where AI decisions can have significant impacts on individuals' lives, such as healthcare, finance, or employment.

The erosion of stakeholder trust is a critical consequence of undisclosed AI use. When stakeholders discover that an organization has been covertly using AI, it can lead to a significant breakdown in trust. Customers may feel deceived and question the authenticity of their interactions with the company. Employees might feel their privacy has been violated if AI has been used to monitor or evaluate their performance without their knowledge. Investors and partners may question the organization's transparency and integrity, potentially leading to withdrawn support or collaboration.

This erosion of trust can have long-lasting effects on an organization's reputation and relationships. In today's interconnected world, news of undisclosed AI use can spread rapidly, leading to public backlash and negative media coverage. The resulting damage to the company's reputation can be severe and long-lasting, affecting everything from customer loyalty to employee retention and investor confidence.

Surprisingly, undisclosed AI use can also lead to a competitive disadvantage. While organizations might initially perceive covert AI deployment as a competitive edge, the reality often proves otherwise. By not openly acknowledging and discussing their AI initiatives, companies miss out on valuable opportunities for collaboration, knowledge sharing, and industry leadership in responsible AI development. Undisclosed AI use can hinder an organization's ability to attract top talent in the AI field. Many AI professionals are deeply concerned with the ethical implications of their work and prefer to work for organizations that are transparent about their AI use and committed to responsible development practices. By keeping AI initiatives under wraps, companies may find themselves at a disadvantage in the competitive market for AI talent.

Additionally, as regulatory frameworks around AI continue to evolve, organizations that have been using AI covertly may find themselves scrambling to comply with new regulations. This reactive approach can be more costly and time-consuming than proactively developing transparent AI governance structures. In light of these consequences, it becomes clear that the undisclosed use of AI is not only ethically questionable but also potentially detrimental to an organization's long-term success and sustainability. As we move forward, it is crucial for organizations to recognize the importance of transparency in AI use and to implement robust governance frameworks that ensure responsible and ethical AI deployment.

Implementing Effective AI Governance

To address the risks and consequences associated with unauthorized and undisclosed AI use, organizations must implement effective AI governance frameworks. These frameworks should be comprehensive, adaptable, and deeply integrated into the organization's overall governance structure.

AI ethics committees play a crucial role in effective AI governance. These committees should comprise a diverse group of stakeholders, including technical experts, ethicists, legal professionals, and representatives from various business units. The primary function of an AI ethics committee is to provide oversight and guidance on the ethical implications of AI initiatives within the organization. They should be empowered to review and approve AI projects, ensuring that they align with the organization's ethical standards and values.

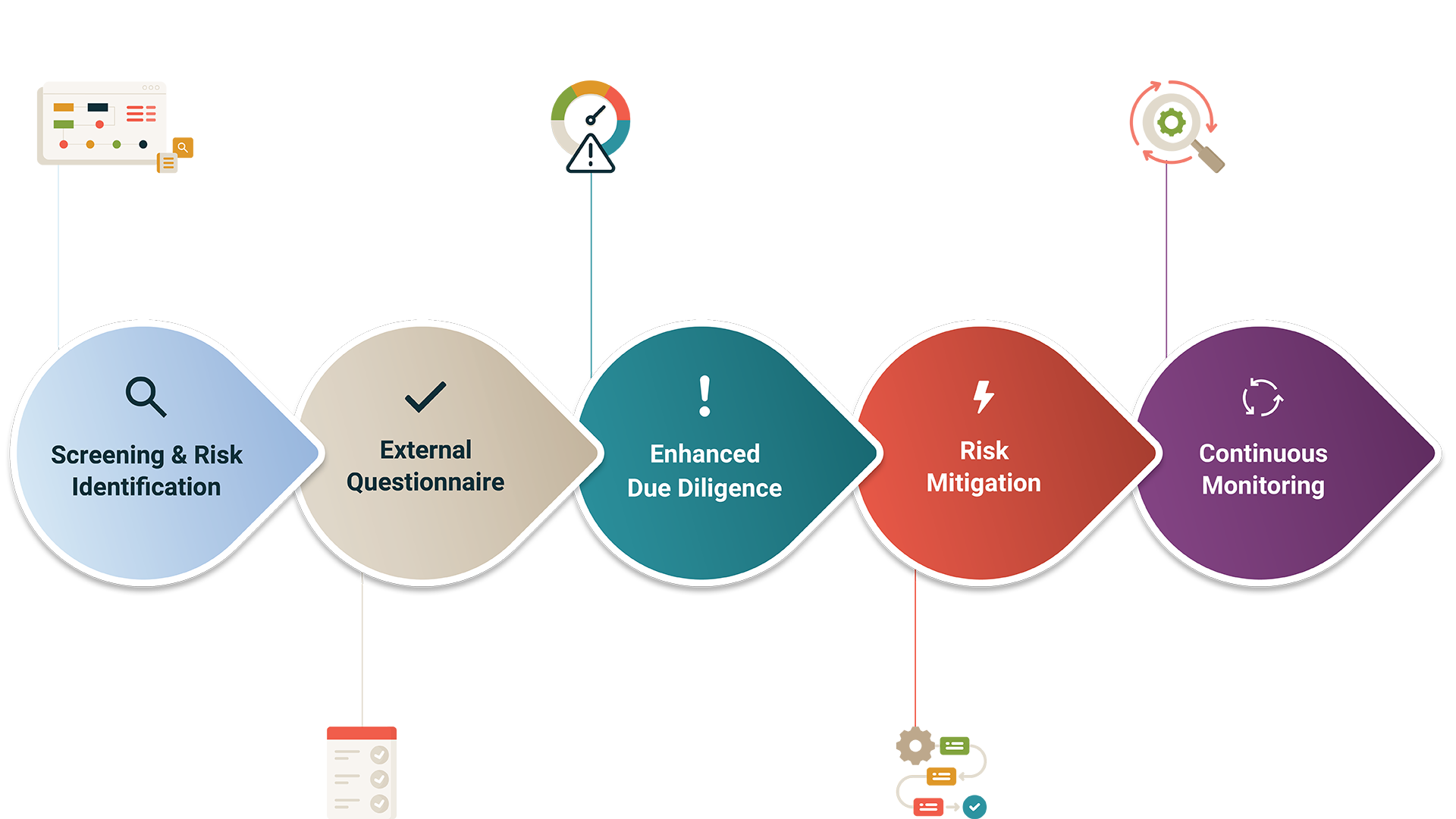

The establishment of AI risk assessment frameworks is another critical component of effective AI governance. These frameworks should provide a structured approach to identifying, evaluating, and mitigating risks associated with AI deployment. Risk assessments should consider a wide range of factors, including data privacy, algorithmic bias, security vulnerabilities, and potential societal impacts. The DOJ's guidance emphasizes the importance of risk assessment in compliance programs, stating that a company should "identify, assess, and define its risk profile." In the context of AI, this means continuously evaluating the evolving risks associated with AI technologies and their applications within the organization.
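A common way to structure such an assessment is a likelihood-times-impact score for each risk factor. The sketch below assumes 1-5 rating scales, uses the four factors named above, rates a system by its single worst factor, and applies illustrative thresholds (15 for "high", 8 for "medium"). The class name, thresholds, and example system are hypothetical; published frameworks such as NIST's AI RMF are considerably richer.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

# Risk factors named in the text; the 1-5 scales and thresholds are assumptions.
FACTORS = ("data_privacy", "algorithmic_bias", "security", "societal_impact")


@dataclass
class AIRiskAssessment:
    system_name: str
    ratings: Dict[str, Tuple[int, int]]  # factor -> (likelihood 1-5, impact 1-5)

    def scores(self) -> Dict[str, int]:
        """Likelihood x impact for each factor (range 1-25)."""
        out = {}
        for factor in FACTORS:
            likelihood, impact = self.ratings[factor]  # KeyError if a factor is unrated
            if not (1 <= likelihood <= 5 and 1 <= impact <= 5):
                raise ValueError(f"{factor}: ratings must be between 1 and 5")
            out[factor] = likelihood * impact
        return out

    def overall(self) -> str:
        """Rate the whole system by its worst-scoring factor."""
        worst = max(self.scores().values())
        if worst >= 15:
            return "high"
        if worst >= 8:
            return "medium"
        return "low"


# Hypothetical resume-screening system, for illustration only.
resume_screener = AIRiskAssessment(
    "resume-screener",
    {"data_privacy": (3, 4), "algorithmic_bias": (4, 4),
     "security": (2, 3), "societal_impact": (3, 3)},
)
print(resume_screener.scores(), resume_screener.overall())
```

Rating by the worst factor, rather than an average, keeps one severe risk (here, bias) from being diluted by milder ones.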

Transparency and explainability are fundamental principles that should underpin any AI governance framework. Organizations must strive to make their AI systems as transparent and explainable as possible, both internally and externally. This involves developing AI systems that can provide clear explanations for their decisions and actions, as well as implementing processes to communicate the use and impact of AI to relevant stakeholders. Transparency in AI use builds trust with stakeholders and demonstrates the organization's commitment to ethical AI practices. It also aligns with the growing regulatory emphasis on explainable AI, as seen in the EU's AI Act, which requires human oversight and transparency for high-risk AI systems.

Policy Development And Enforcement

Effective AI governance requires the development and enforcement of comprehensive policies that guide the use of AI within the organization. These policies should be living documents, regularly updated to reflect the rapidly evolving AI landscape and regulatory environment.

AI use guidelines should clearly define the parameters for acceptable AI deployment within the organization. These guidelines should cover aspects such as data usage, model development practices, testing and validation procedures, and ethical considerations. They should also establish clear lines of responsibility and accountability for AI initiatives across different levels of the organization.

Approval processes for AI projects are crucial to ensure that all AI initiatives undergo proper scrutiny before implementation. These processes should involve multiple stakeholders, including the AI ethics committee, legal department, and relevant business units. The approval process should assess the proposed AI system's alignment with organizational policies, ethical standards, and regulatory requirements.

Monitoring and auditing mechanisms are essential to ensure ongoing compliance with AI governance policies. Regular audits of AI systems should be conducted to assess their performance, identify potential biases or issues, and ensure they continue to operate within established guidelines. Continuous monitoring can help detect unauthorized AI use and enable prompt intervention when necessary.
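Detecting unauthorized AI use often comes down to reconciling what is actually in use against an approved inventory. The following is a minimal sketch under stated assumptions: usage events have already been collected from some source (for example, proxy logs or a software inventory, which this code does not cover), and each approved tool carries a hypothetical review date after which its approval lapses. All names and data are illustrative.

```python
from dataclasses import dataclass
from datetime import date
from typing import Dict, List


@dataclass(frozen=True)
class UsageEvent:
    user: str
    tool: str        # identifier of the AI tool observed in use
    seen_on: date


@dataclass(frozen=True)
class Approval:
    owner: str       # accountable business owner for the tool
    review_due: date # approval lapses if not re-reviewed by this date


def find_unauthorized(events: List[UsageEvent],
                      registry: Dict[str, Approval]) -> List[UsageEvent]:
    """Flag usage of tools that are unregistered or whose approval has lapsed."""
    flagged = []
    for event in events:
        approval = registry.get(event.tool)
        if approval is None or event.seen_on > approval.review_due:
            flagged.append(event)
    return flagged


# Hypothetical registry and observed usage.
registry = {"internal-summarizer": Approval("legal-ops", date(2025, 6, 30))}
events = [
    UsageEvent("alice", "internal-summarizer", date(2025, 3, 1)),
    UsageEvent("bob", "unvetted-chatbot", date(2025, 3, 2)),
    UsageEvent("carol", "internal-summarizer", date(2025, 7, 15)),
]
for e in find_unauthorized(events, registry):
    print(e.user, e.tool, e.seen_on)
```

Bob is flagged because his tool is not registered at all; Carol is flagged because she used an approved tool after its review date passed, which is the kind of drift periodic audits are meant to catch.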

Training And Awareness

A critical aspect of effective AI governance is ensuring that all relevant stakeholders within the organization have a proper understanding of AI technologies, their potential impacts, and the ethical considerations surrounding their use.

AI literacy programs should be developed and implemented across the organization. These programs should aim to provide employees at all levels with a basic understanding of AI technologies, their applications, and their potential risks and benefits. For technical teams involved in AI development, more advanced training should be provided on topics such as ethical AI design, bias mitigation techniques, and privacy-preserving machine learning.

Training on ethical AI practices is crucial to foster a culture of responsible AI use within the organization. This training should cover topics such as fairness, accountability, transparency, and privacy in AI systems. It should also educate employees on the potential societal impacts of AI and the importance of considering diverse perspectives in AI development and deployment.

Establishing effective reporting mechanisms is essential to enable employees to raise concerns about AI use within the organization. These mechanisms should provide clear channels for reporting potential violations of AI policies or ethical concerns related to AI systems.

By implementing these comprehensive AI governance measures, organizations can mitigate the risks associated with unauthorized and undisclosed AI use while fostering an environment of responsible innovation. Effective AI governance not only protects the organization from potential legal and reputational risks but also positions it as a leader in ethical AI practices, enhancing trust with stakeholders and contributing to long-term sustainable success in the age of artificial intelligence.

Future of AI Governance

As we look towards the horizon of artificial intelligence, it becomes increasingly clear that the landscape of AI governance is poised for significant evolution. The rapid advancement of AI technologies, coupled with growing awareness of their potential impacts, is driving a dynamic shift in how organizations, governments, and society at large approach AI governance. This section explores the key trends and challenges that will shape the future of AI governance.

The Regulatory Landscape

The evolving regulatory landscape is perhaps the most prominent factor influencing the future of AI governance. As AI technologies continue to permeate various sectors of society, governments and regulatory bodies worldwide are recognizing the need for more comprehensive and nuanced regulatory frameworks. The European Union's AI Act, which takes a risk-based approach to AI regulation, is a harbinger of the regulatory trends we can expect to see globally.

In the United States, while comprehensive federal AI regulation has yet to materialize, various agencies are taking steps to address AI-related issues within their jurisdictions. The DOJ, for instance, has signaled that it will update its guidance on the Evaluation of Corporate Compliance Programs to include assessment of disruptive technology risks, including risks associated with AI. This reflects a growing recognition of AI governance as a critical component of overall corporate compliance.

We can anticipate that future regulatory frameworks will likely focus on key areas such as:

- Algorithmic transparency and explainability

- Fairness and non-discrimination in AI systems

- Data privacy and security in AI applications

- Accountability and liability for AI-driven decisions

- Human oversight and intervention in high-risk AI systems

Organizations must remain vigilant and adaptable, ready to align their AI governance frameworks with these emerging regulatory requirements.

AI Governance Standards

The development of AI governance standards is another crucial aspect shaping the future of this field. As the AI landscape matures, we can expect to see the emergence of industry-specific and cross-sector standards for responsible AI development and deployment. These standards will likely be developed through collaborations between industry leaders, academic institutions, and regulatory bodies.

The IEEE's "Ethically Aligned Design" initiative, NIST’s AI Risk Management Framework (AI RMF), and ISO/IEC's ongoing work on AI standards are examples of efforts to establish common frameworks for AI governance. These standards will provide organizations with clearer guidelines for implementing ethical AI practices, conducting risk assessments, and ensuring accountability in AI systems.

The challenge, however, lies in creating standards that are robust enough to ensure responsible AI use while remaining flexible enough to accommodate rapid technological advancements. Future AI governance standards will need to strike a delicate balance between prescriptive guidelines and principle-based approaches that can adapt to evolving AI capabilities and use cases.

AI Governance - Striking The Right Balance Between Innovation And Control

Perhaps the most significant challenge in the future of AI governance will be balancing innovation and control. As AI technologies continue to advance at a breakneck pace, organizations and regulators alike will grapple with the tension between fostering innovation and implementing necessary safeguards. On one hand, overly restrictive governance frameworks risk stifling innovation and putting organizations at a competitive disadvantage. On the other hand, insufficient controls could lead to the development and deployment of AI systems that pose significant risks to individuals and society at large.

The key to navigating this challenge will lie in developing adaptive governance frameworks that can evolve alongside AI technologies. These frameworks should:

- Encourage responsible innovation by providing clear guidelines and ethical boundaries for AI development.

- Implement tiered governance approaches that apply more stringent controls to high-risk AI applications while allowing greater flexibility for lower-risk use cases.

- Foster collaboration between industry, academia, and regulatory bodies to ensure governance frameworks are informed by both technical expertise and ethical considerations.

- Promote transparency and open dialogue about AI development and deployment, encouraging public trust and engagement in AI governance.
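The tiered approach in the second point above can be sketched as cumulative control sets, where each higher tier inherits every control required by the tiers below it. The three tiers and the control names are hypothetical, loosely drawn from measures discussed earlier in this article; a real framework (for example, one mapped to the EU AI Act's risk categories) would define its own.

```python
from enum import IntEnum
from typing import Dict, FrozenSet, Set


class RiskTier(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Controls introduced at each tier (illustrative names only).
_TIER_CONTROLS: Dict[RiskTier, FrozenSet[str]] = {
    RiskTier.LOW: frozenset({"inventory_registration", "usage_disclosure"}),
    RiskTier.MEDIUM: frozenset({"risk_assessment", "periodic_monitoring"}),
    RiskTier.HIGH: frozenset({"ethics_committee_approval", "bias_audit",
                              "human_oversight"}),
}


def required_controls(tier: RiskTier) -> Set[str]:
    """All controls required at `tier`, including those from lower tiers."""
    required: Set[str] = set()
    for level in RiskTier:  # iterates LOW, MEDIUM, HIGH in definition order
        if level <= tier:
            required |= _TIER_CONTROLS[level]
    return required


def missing_controls(tier: RiskTier, implemented: Set[str]) -> Set[str]:
    """Controls still outstanding before a system at `tier` may be deployed."""
    return required_controls(tier) - implemented


print(sorted(missing_controls(RiskTier.HIGH,
                              {"inventory_registration", "risk_assessment"})))
```

Making controls cumulative guarantees that a high-risk system always faces strictly more requirements than a low-risk one, while low-risk use cases keep a light footprint.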

As we move forward, organizations must recognize that effective AI governance is not just about compliance with external regulations, but about embedding ethical considerations and responsible practices into the very fabric of AI development and deployment. The future of AI governance will require a proactive, rather than reactive, approach.

The future of AI governance presents both challenges and opportunities. Organizations that can navigate this landscape successfully, contribute to the development of robust governance standards, and strike the right balance between innovation and control will be well-positioned to harness the full potential of AI technologies responsibly and ethically. As we stand on the cusp of an AI-driven future, effective governance will be the key to ensuring that this powerful technology serves the best interests of organizations, individuals, and society as a whole.